Z-order in 8D

The indexes based on self-similar curves swept suitable not only for search of spatial data. They are efficient and heterogeneous data laid out on the integer lattice.

Under the cut we'll verify use Z-curve to implement the 8-dimensional index with an eye on the the OLAP cube.

the

summary of the previous series.

Previously (1, 2, 3, 4, 5), it was shown that the index of the Z-curve is quite suitable for the spatial search. It is very close in speed (in the absence of restrictions on the buffer cache) to the traditionally used R-tree, but much smaller, faster construction, not afraid of random data, fewer reads in the search.

the

Concerns.

Technically, there is nothing difficult in implementing such an index there, it took only to do processing of 8-dimensional key. Dimension 8 was selected from technological considerations, if you need a 7-dimensional index, we can safely complete it with zeros for one of the coordinates, the speed is almost not affected, except that the key will become longer. If necessary, nothing prevents to make longer, such as 16-dimensional key.

It is worth to think in advance, what pitfalls can be expected and what to do with it.

the

- How about the accuracy? In the current implementation allowed up to 31 digits to encode the values. If data (floating point) obtained in a natural way to encode likely be enough and 20 bits. There are certainly 32-bit ADC, but it is rather exotic. It is clear that double contains 64 bits, but most of the data without loss (or with minimal losses) can be planted in 31-bit bars. If not, the data were processed, such as averaged.

If necessary, the resolution of the described index may be increased. This will cause swelling of the key, and performance degradation. However, while page placed more than one key, they can be organized as B-tree.

On the other hand, a small loss of accuracy is not essential in our case. After all, we are searching interval on each dimension. To obtain the correct result, it is sufficient to take the interval with a margin and remove the extra post-filtering.

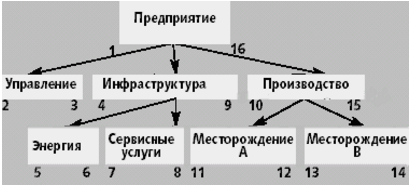

the - Data can have a hierarchical nature. For example, the number encodes the sensor, but the sensors are grouped, their group will form its own hierarchy. And I want to make a selection for a branch of this hierarchy. This problem resolved bypass, and the numbering of tree nodes, in that order. Then, each tree node has the interval of values is its own ID to the ID of your last child.

And the intervals is exactly what we need to search for.

Will experiment with a pseudo-random data. But the real data has internal dependencies that are highly dependent on specific tasks and hard to provide. Yes, it is, as our modeling of pseudo-random data will actually be 6-dimensional and two-column just duplicate.

In General, this problem is fundamental for multidimensional R-trees, which have heuristics, splitting/merging pages. If the dependencies in the data do not fit the heuristic, there can begin to work counter-productive, which affects the build time/work and on the size of the index.

For ordinary B-tree, upon which we built our index, this problem is not so terrible — the tree itself follows the balance.

In reality, different dimensions have different scales, the maximum extent can be flattened in one dimension and elongated in others. Yes, of course, but again, it's pretty important when constructing multidimensional R-trees and much less critical in the case of B-trees.

the

Multidimensional rectangles have a developed surface and encoding the Z-curve will be very inefficient. There are some concerns, at the same time check.

Benchmark

The following table summarizes the results for several (described below) request types.

Data — 100 000 000 random points uniformly distributed in the 8-dimensional cube with sides [0...1 000 000].

Measurements (as before) was performed on a virtual machine with two cores and 4 GB of RAM, so the times are not absolute values, but the numbers of pages read is still possible to trust.

The times shown in the second runs, on a heated server and virtual machine. The number of read buffers — into the fresh-raised server.

-

the

- That commit: (SHA-1: 36dd...76c7)

the - gawk -f gendata.awk 〉 data.csv

theBEGIN{ for (i = 0; i < 100000000; i++) { x = int(1000000 * rand()); y = int(1000000 * rand()); z = int(1000000 * rand()); a = int(1000000 * rand()); b = int(1000000 * rand()); c = int(1000000 * rand()); print "{"x","y","z","a","b","c","a","b"}"; } }notice the “a” and “b” appear twice, the data are degenerate, this dimension is 6

the create table test_points_8d (p integer[8]);

the COPY test_points_8d from '/home/.../postgresql/contrib/zcurve/data.csv';

10 minutes

the - \dt+

theSchema | Name | Type | Owner | Size | Description -------+----------------+-------+----------+---------+------------- public | test_points_8d | table | postgres | 8056 MB |

the create index zcurve_test_points_8d on test_points_8d (zcurve_num_from_8coords (p[1], p[2], p[3], p[4], p[5], p[6], p[7], p[8]));

15 minutes

the - \di+

theSchema | Name | Type | Owner | Table | Size | --------+-----------------------+-------+----------+---------------+-------+--- public | zcurve_test_points_8d | index | postgres | test_points_8d | 4743 MB

the - validation request

returns theselect c, t_row from (select c, (select p from test_points_8d t where c = t.ctid) from t_row zcurve_8d_lookup_tidonly('zcurve_test_points_8d', 237000,291000,845000,152000,585000,193000,152000,585000, 247000,301000,855000,162000,595000,203000,162000,595000) as c) x;

c | t_row

------+-----------------------------------------------------------

(0,1) | {237787,291065,845813,152208,585537,193475,152208,585537}

as required

| request Type | NPoints | Nreq | Time(ms) | Reads | Shared Hits |

|---|---|---|---|---|---|

| all 10,000 |

1.1 e-4 | 100 000 | .46 | 2.0175 | 15.3919 |

| all 100 000 |

73.9 | 10 000 | 47 | 57.3063 | 1604.18 |

| 3X100 000 + 5X10 000 |

0.0837 | 10 000 | .9 | 8.7637 | 73.8852 |

| 2Х100 000 + 6 × 10 000 |

0.0096 | 10 000 | .66 | 5.1771 | 40.9089 |

| 1 whole + 1X100 000 + 6X10 000 |

0.0978 | 10 000 | 1.5 | 24.2122 | 199.33 |

| 2 whole + 6X10 000 |

0.9663 | 10 000 | 95 | 115.202 | 1050.8 |

request Type — one of the following

the

-

the

- all 10 000. The direction of the General extent of 1,000,000 requests in the form of a cube with sides of 10 000, the beginning of the queries evenly distributed inside the total extent. Side query is 1E-2 of the maximum. Since we have 6 independent parameters, we obtain 1E-12 of the total volume. The number of points — 1E8, then the theoretical probability to find a point in that the extent of approximately 1E-4 the

- by 100 000. The extent of the query in a cube with sides of 100 000 is 1e-1. Since we have 6 independent parameters, we get 1e-6. The number of points — 1e8, then the average number of points in this request 100. On the other hand, the probability to get out beyond the extent of one-coordinate — 10%, for any of the 6 — 53% (0.9**6), if the average velezinee 5%, then the probability 0.735 (0.95**6), which is consistent with the obtained value of

- 2Х100 000 + 6 × 10 000. Requests tapered in 6 dimensions

- 1 whole + 1X100 000 +6X10 000. Requests tapered for 6 dimensions, one dimension is taken as a whole, one is 100 000. Simulated the situation when one of the values is unknown, you have to take the full interval on it. the

- 2 whole + 6X10 000. The two values taken as a whole, aggravating the situation with the strangers.

000 3X100 + 5X10 000. Requests tapered in 5 dimensions the

NPoints — the average number of points found in the request

Time(ms) — average time of query execution

Reads — the average number of readings by request

Shared Hits — number of hits to the cache buffer

the

Insights

As expected, an important role in the cost of a query plays the square of the perimeter of the query. In fact, the larger the perimeter, the potentially greater the number of pages he crosses, the more you need to read, the less the efficiency of the cache ...

However, for cubic queries, the query cost is quite acceptable. In fact, for the query — cube 100 000, on average, to read 57 pages 74 rows in the results. In order to read these 74 rows, you would probably have to read more about 74 pages.

In addition, the data obtained on uniformly distributed data, clustered data, it is expected that to obtain the same power output the size of the queries will be much less, as well as the number of pages read.

It would be interesting to see.

PS: on the cover image depicts octeract — 8-dimensional cube

PPS: thanks to Andrei Borodin (Antonica, ECx.) for the tip on the subject

Комментарии

Отправить комментарий